A perfect data model, well designed and fast as an in-memory Power BI dataset, as a basis for standard and new self-service reports. The refresh for current data is carried out at night.

But now you would also like to input other small data, e.g. Comments or a status is changed, this data goes back to the data warehouse, e.g. with PowerApps - but unfortunately we won't see them in the report until tomorrow ...

Of course, the user wants to see the data immediately!

There is a very simple solution for this: the refreshes in the Power BI Service ...

But unfortunately only 8 times a day, with Premium at least 48.

So another solution is needed!

Inhalt

Refresh with REST API

The refresh can also be triggered via an API and thus at a controlled point in time in a sequential overall process, typically as the last step in a loading chain.

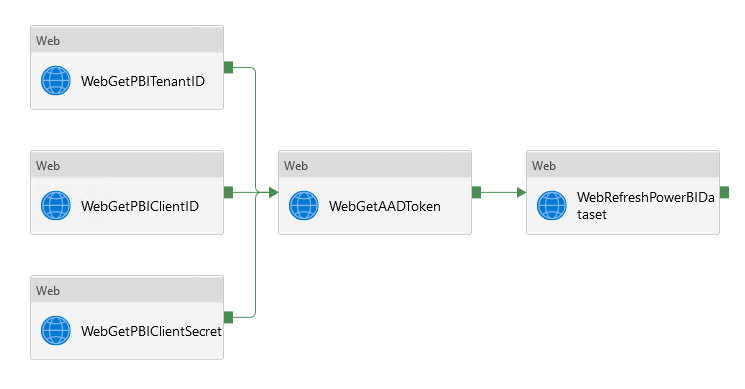

It's not that simple in the setup either, but works well with the Azure Datafactory with a service principal and looks something like this:

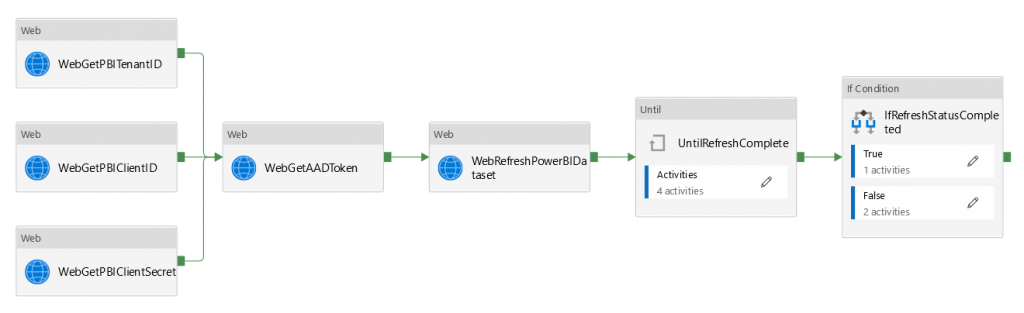

In the luxury version, the status and error are also queried with a loop, since this web call runs asynchronously.

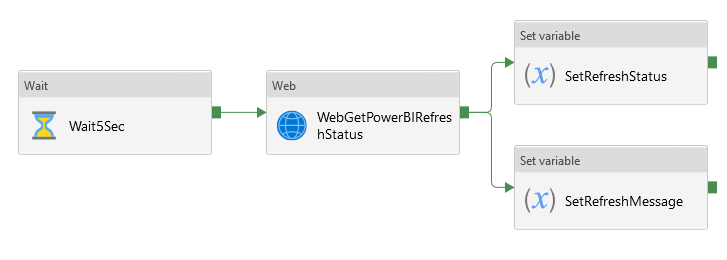

Every 5 seconds it is checked whether the refresh is finished or an error is logged:

Naive and lazy as I am, I want to use this method to refresh a single table.

Das Refresh vom ganzen Modell mit 100 Tabellen und zahllosen Relations würde 40 Minuten dauern, obwohl sich die Daten nicht geändert haben, also möchte ich nur die Eingabetabelle der Benutzer processen, was in 3 Minuten fertig ist.

But I am disappointed ...

Unfortunately there is no table refresh in the API!

What AAS is providing, PBI can not handle, so a new approach is necessary.

Refresh with Power Shell

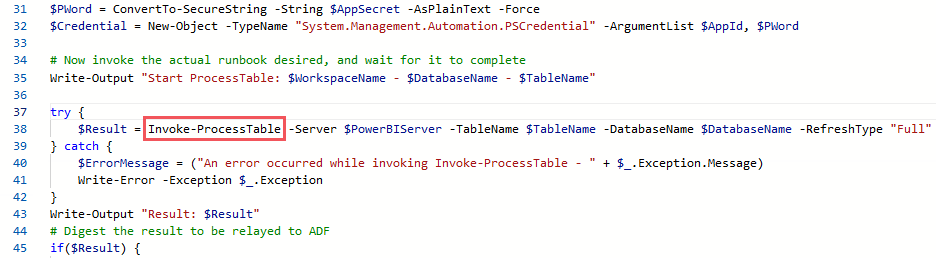

The Power Shell cmdlet for AS can also be used for Power BI to process a single table.

If, as a programming grouch, I have to program for a standard task, then I imagine that at least I can simply call the script in the data factory, but here, too, I am disappointed, because that doesn't exist.

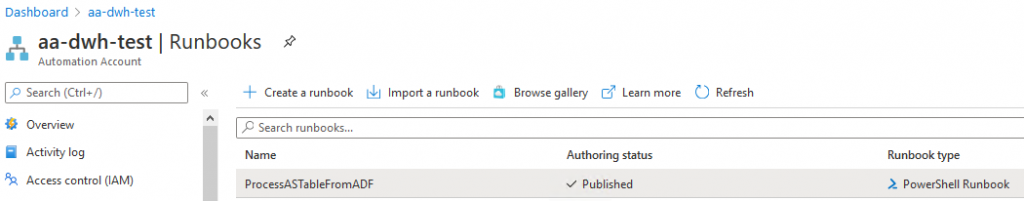

So the power shell script goes to an automation account.

The Power Shell is started via a web call in the automation account.

Data Factory call of the runbook

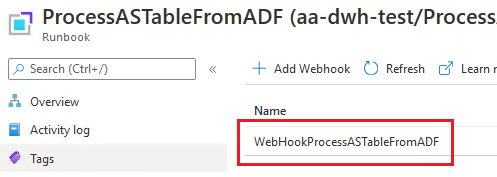

A webhook from the runbook is required for the call; the runbook can then be started with this url.

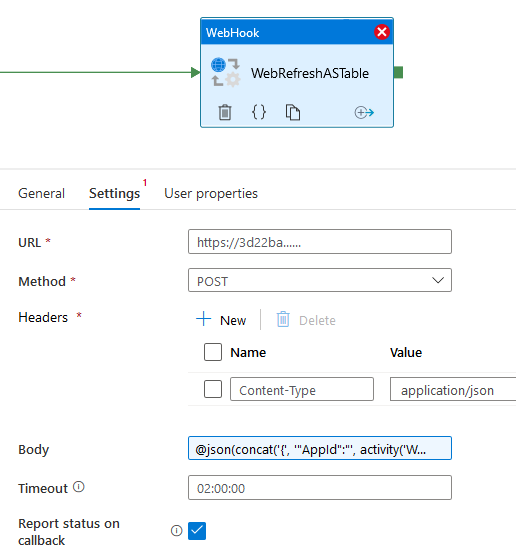

In the data factory you only need this web call to start the runbook with the Powershell.

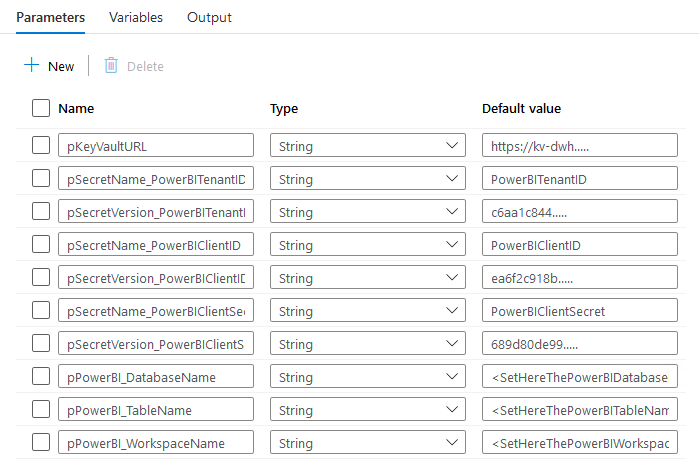

The parameters for the database, table, etc. are set and credentials are determined using a KeyVault.

The necessary parameters are transferred to the runbook as a complete JSON body and decoded there again.

The normal web call is asynchronous, thats why the webhook is used so that it waits until the refresh is finished.

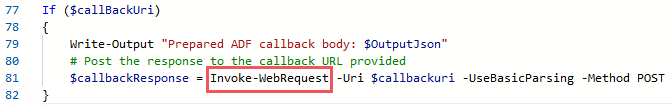

Das funktioniert aber nur, wenn callback gesetzt ist, aber auch im Power Shell der Callback explizit aufgerufen wird als letzter Schritt!

Time control

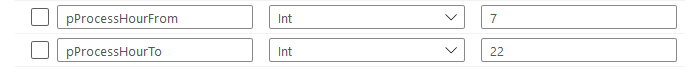

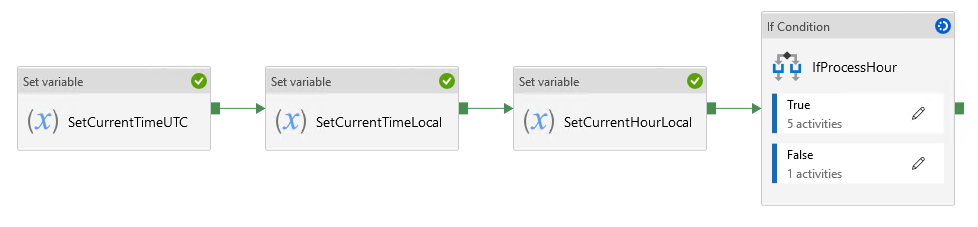

The user entries are only relevant during the day and the table refresh shouldn't get in the way of the daily load, so there is an additional parameter control to determine whether the refresh should be carried out, depending on the time.

Trigger for constant refresh

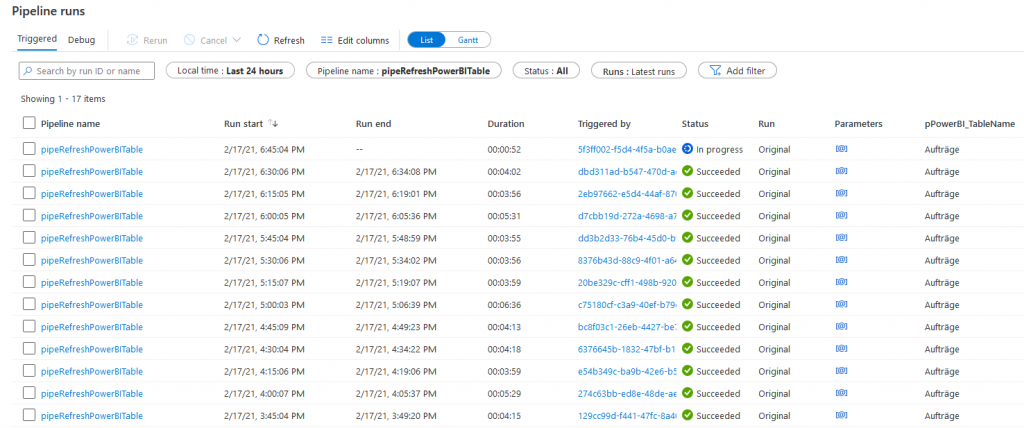

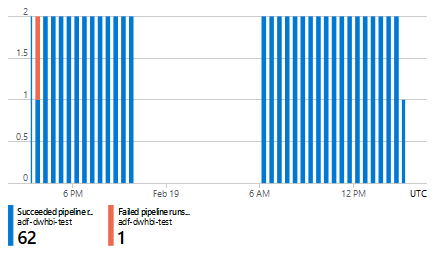

To ensure that the table refresh is updated as often as possible, a trigger is fired every 15 minutes that executes the pipeline.

A metric shows the runs with the break in the night.

Summary

The solution for a table refresh in Power BI looks like this:

- Setup for App Registration, Service Principal, KeyVault access

- Power Shell Script for the table refresh with parameters

- Runbook with the Power Shell Script with a WebHook

- Data Factory fetches credentials via a KeyVault and executes the runbook via a WebHook

- A scheduled trigger regularly calls the refresh

Conclusion

Refreshing individual tables is actually not intended, but is often desired and useful in practice.

Mit dieser doch komplizierten Lösung aus verschiedenen Komponenten ist dies aber durchaus stabil umsetzbar und sogar möglich, Daten aus dem Power BI Dataset „near realtime“ anzuzeigen.

Share your experience with a comment below!

14. March 2021 at 11:26

Nice setup 👍🏻