Inhalt

Starting position

A long project comes to an end, data sources were connected over many months, a new data warehouse built, analysis models created and reports built - and now the end users can hardly wait to finally get their efficient, modern, fast and interactive reports with the cool Power BI use…

Everything is going well, the loading process makes it through the night, the model is a Power BI Premium Dataset and processes daily, the complex DAX calculations still result in reasonable response times in the report.

Aber was passiert, wenn jetzt 1.000 User gleichzeitig zugreifen?

Will the system collapse?

Verwenden wir lieber doch einen AAS (Azure Analysis Services)?

Brauchen wir einen Premium P2?

So better test before...

But how?

In any case so realistic as possible!

Since there aren't 1,000 users who call up reports like crazy for 2 hours, it will probably have to be an automated test.

What do we want to test?

I have selected three reports that are very intense, not just the amount of data, but many very complex DAX measures in a matrix list.

The aim should be to repeatedly call up this query with the report with different filters, if possible.

We are satisfied with 100 users first, if that works, then with 500-700 users at the same time.

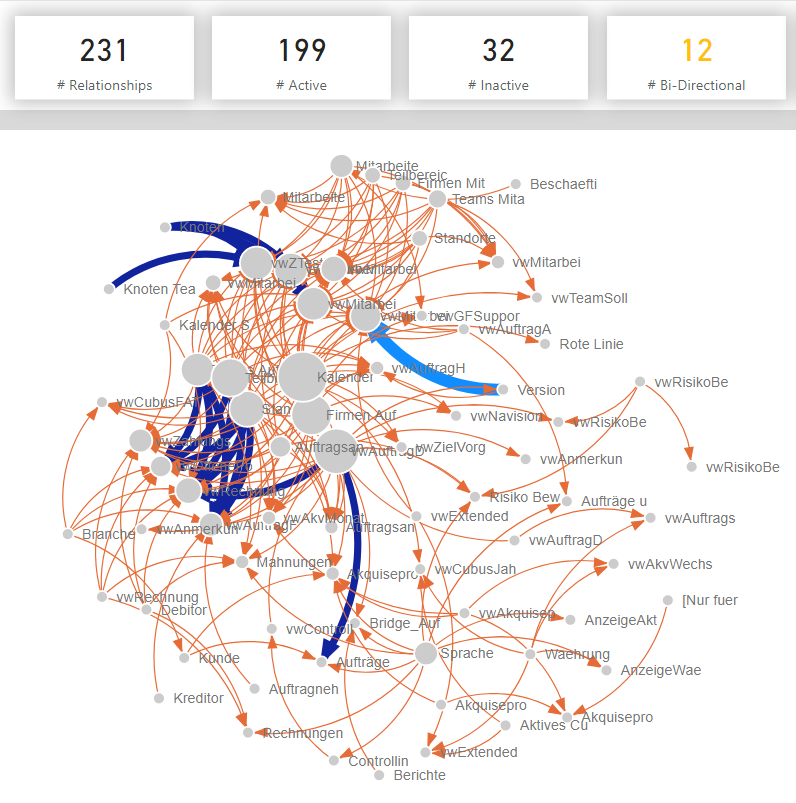

What does the model look like?

The tabular model as a premium dataset is not very large (3 GB), but quite complex.

How can you test that?

Now it gets interesting, because there is no ready-made procedure or even a tool - so there are a number of sometimes complex failures ...

Browser Refresh

Browser Refresh

Simply call up the report over and over again via the browser, change filters, call up again.

That could be done eg. with JavaScript.

But for 100 browser instances or even 500?

That could only go wrong, the client is already at the end with 30 browser windows, ultimately you measure the behavior of the client and not the report and I didn't want to set up 100 VMs either.

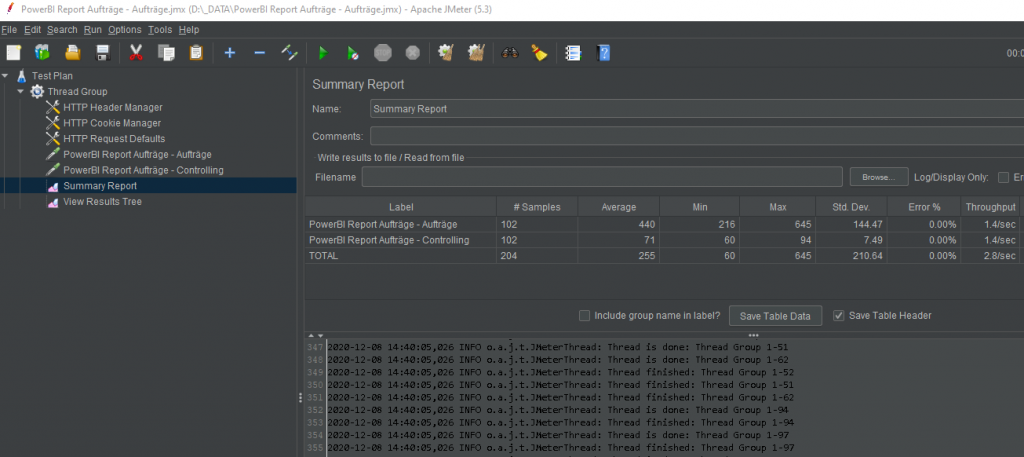

Testing Tool JMeter

Testing Tool JMeter

I was immediately enthusiastic, you can configure everything well, determine what you send, exchange filters, define iterations and also set the parallelization.

But unfortunately only web traffic is measured here, i.e. the query is not triggered at all and neither is the HTML rendering of the report.

So it was proven that you can get 100 times respond in parallel to the report URL and back, not quite as we would like ...

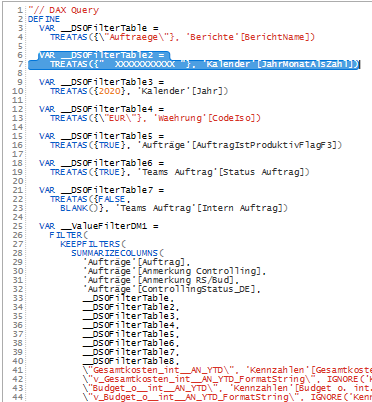

Compromise DAX Query

So I reject the idea of testing the report as a whole visualization and limit myself to the query.

From Power BI, I determine the DAX statement from the complex matrix and break it down into two parts, so that I can always change a filter in between, otherwise for the cache would too easy, I also determine 10 different filter conditions that also bring data.

The test no longer relates to the entire report page, but only to one visual and the rendering time is also omitted - the query accounts for 90% of the loading time.

How do you automatically query a DAX query and receive the result?

PowerShell

It is certainly possible to connect to the Premium Dataset, to work with XMLA and also to send DAX and you can then certainly program loops and also measure the time, the only question remains whether 500 PowerShell can run at the same time ...

Knowing me means, that I avoid coding as long as possible...

So I have choosen anotehr way.

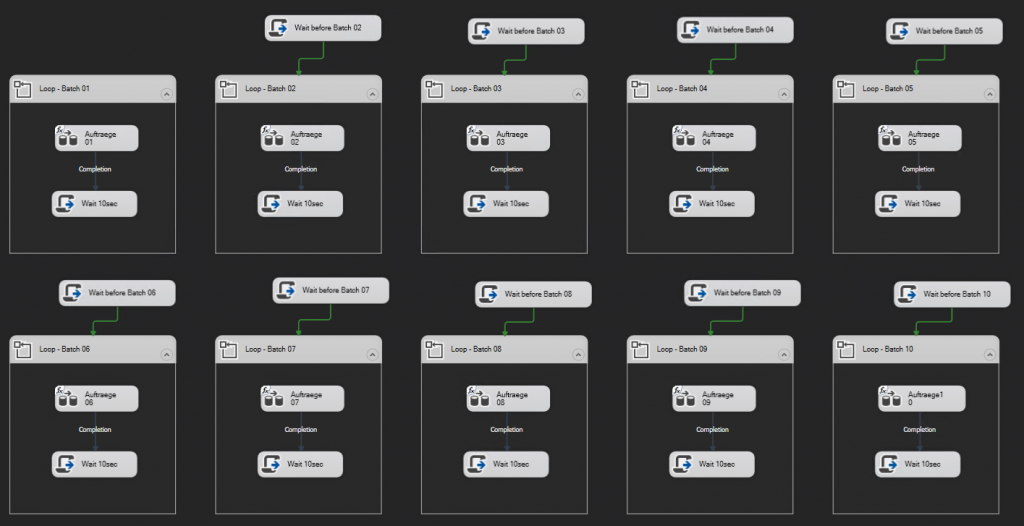

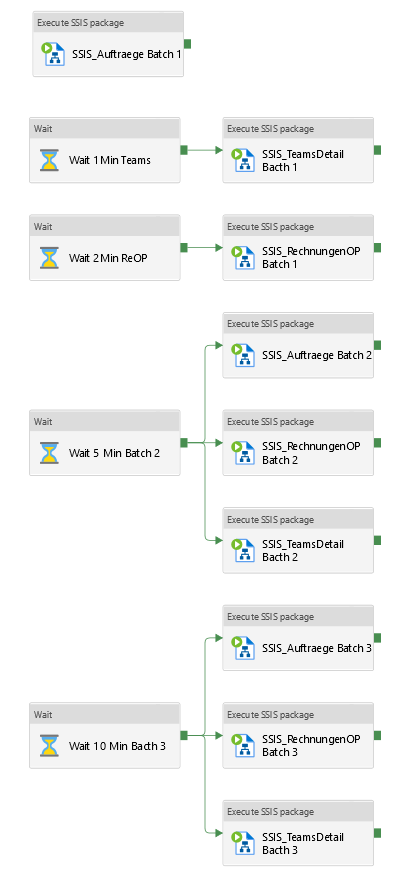

Integration Services (SSIS)

Since SSIS is already used in the project and SSIS is a good old friend of mine, I built three packages for the three different technical reports / queries, rotating 10 filters in a loop with a 10 second pause and 10 more times parallelized, slightly delayed.

The effort was low, the infrastructure in the Azure data factory and the network connection was already available.

With the loops and parallelization you get a maximum of 30 simultaneous “users” with 3 different queries.

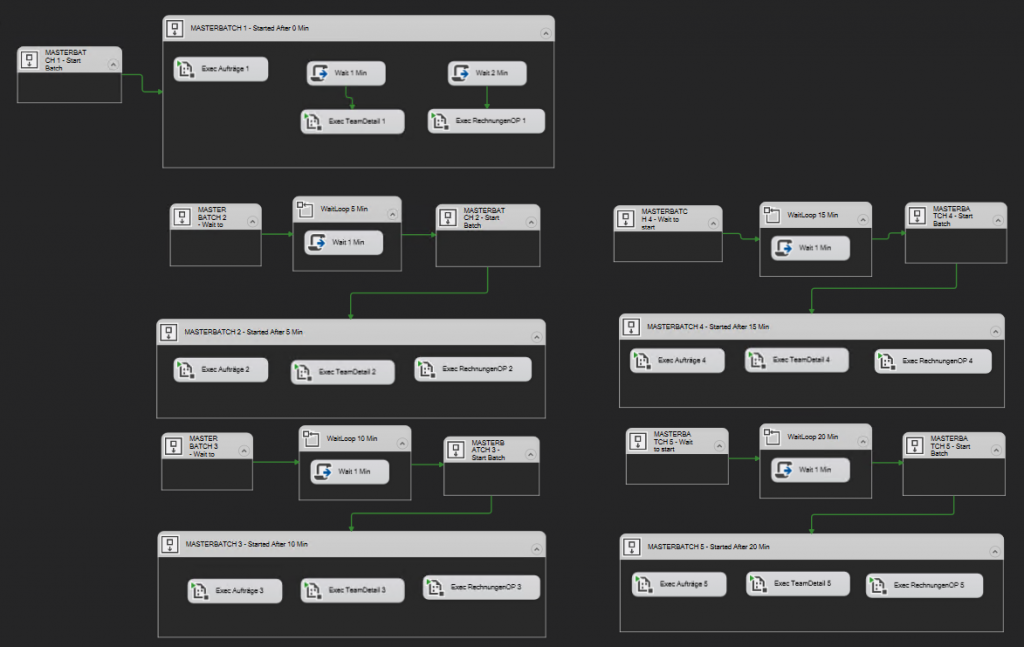

SSIS “Masteloop”

SSIS “Masteloop”

After the parallelized filter queries are running, you just have to multiply that, so I created a master package that calls this whole logic several times in such a way that it increases slowly.

That should bring you to 100 users.

But at this point nothing works anymore ...

Most jobs don't even start to run, everything is stuck, it becomes uncontrollable - because now the runtime environment is measured again instead of the reports.

The first measure is to boost the integration runtime properly from a D4 to a D16 with 4 nodes.

But that doesn't help either, because the problem also lies in SSIS, the time-controlled parallelization no longer works with the script tasks because the timing is not accurate and the package itself is so busy that the timers don't work.

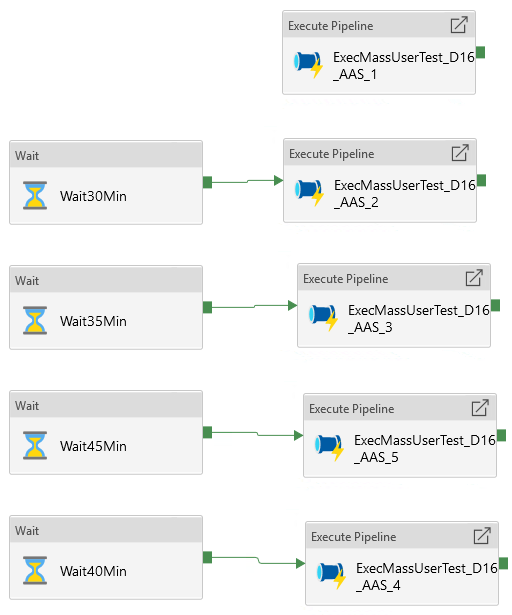

Azure Datafactory mit SSIS

Ich bleibe im Kern bei SSIS, denn dort kann man bequem DAX abfragen, aber die Steuerung verlagere ich in die ADF.

Azure Datafactory with SSIS

After 100 running in parallel for 2 hours without errors, I dare to encapsulate it again and to parallelize it several times, which theoretically leads to 750 simultaneous queries.

The result

After a few runs of 1 to 2 hours each, the logs can be analyzed.

If only it were that easy ...

More about this in Part 2…

Leave a comment